Databricks DATABRICKS-CERTIFIED-PROFESSIONAL-DATA-ENGINEER dumps - 100% Pass Guarantee!

Vendor: Databricks

Certifications: Databricks Certifications

Exam Name: Databricks Certified Data Engineer Professional

Exam Code: DATABRICKS-CERTIFIED-PROFESSIONAL-DATA-ENGINEER

Total Questions: 120 Q&As ( View Details)

Last Updated: Mar 16, 2025

Note: Product instant download. Please sign in and click My account to download your product.

- Q&As Identical to the VCE Product

- Windows, Mac, Linux, Mobile Phone

- Printable PDF without Watermark

- Instant Download Access

- Download Free PDF Demo

- Includes 365 Days of Free Updates

VCE

- Q&As Identical to the PDF Product

- Windows Only

- Simulates a Real Exam Environment

- Review Test History and Performance

- Instant Download Access

- Includes 365 Days of Free Updates

Databricks DATABRICKS-CERTIFIED-PROFESSIONAL-DATA-ENGINEER Last Month Results

97.4% Pass Rate

97.4% Pass Rate 365 Days Free Update

365 Days Free Update Verified By Professional IT Experts

Verified By Professional IT Experts 24/7 Live Support

24/7 Live Support Instant Download PDF&VCE

Instant Download PDF&VCE 3 Days Preparation Before Test

3 Days Preparation Before Test 18 Years Experience

18 Years Experience 6000+ IT Exam Dumps

6000+ IT Exam Dumps 100% Safe Shopping Experience

100% Safe Shopping Experience

DATABRICKS-CERTIFIED-PROFESSIONAL-DATA-ENGINEER Q&A's Detail

| Exam Code: | DATABRICKS-CERTIFIED-PROFESSIONAL-DATA-ENGINEER |

| Total Questions: | 120 |

| Single & Multiple Choice | 120 |

CertBus Has the Latest DATABRICKS-CERTIFIED-PROFESSIONAL-DATA-ENGINEER Exam Dumps in Both PDF and VCE Format

- Databricks_certbus_DATABRICKS-CERTIFIED-PROFESSIONAL-DATA-ENGINEER_by_Dhanush_100.pdf

- 250.54 KB

- Databricks_certbus_DATABRICKS-CERTIFIED-PROFESSIONAL-DATA-ENGINEER_by_CP_101.pdf

- 291.14 KB

- Databricks_certbus_DATABRICKS-CERTIFIED-PROFESSIONAL-DATA-ENGINEER_by_SNAF_passed_103.pdf

- 418.77 KB

- Databricks_certbus_DATABRICKS-CERTIFIED-PROFESSIONAL-DATA-ENGINEER_by_seccisco_97.pdf

- 269.59 KB

- Databricks_certbus_DATABRICKS-CERTIFIED-PROFESSIONAL-DATA-ENGINEER_by_fcatena_108.pdf

- 255.47 KB

- Databricks_certbus_DATABRICKS-CERTIFIED-PROFESSIONAL-DATA-ENGINEER_by_dotun_olutayo_102.pdf

- 281.48 KB

DATABRICKS-CERTIFIED-PROFESSIONAL-DATA-ENGINEER Online Practice Questions and Answers

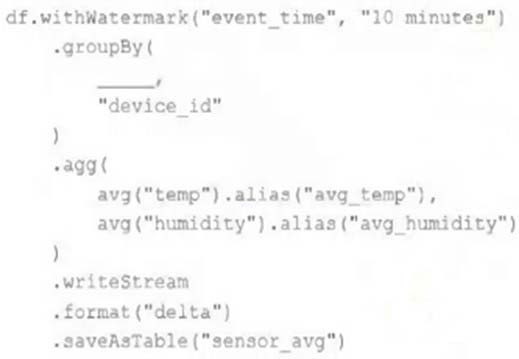

A junior data engineer has been asked to develop a streaming data pipeline with a grouped aggregation using DataFrame df. The pipeline needs to calculate the average humidity and average temperature for each non-overlapping five-minute interval. Events are recorded once per minute per device.

Streaming DataFrame df has the following schema:

"device_id INT, event_time TIMESTAMP, temp FLOAT, humidity FLOAT"

Code block:

Choose the response that correctly fills in the blank within the code block to complete this task.

A. to_interval("event_time", "5 minutes").alias("time")

B. window("event_time", "5 minutes").alias("time")

C. "event_time"

D. window("event_time", "10 minutes").alias("time")

E. lag("event_time", "10 minutes").alias("time")

The data science team has requested assistance in accelerating queries on free form text from user reviews. The data is currently stored in Parquet with the below schema:

item_id INT, user_id INT, review_id INT, rating FLOAT, review STRING

The review column contains the full text of the review left by the user. Specifically, the data science team is looking to identify if any of 30 key words exist in this field. A junior data engineer suggests converting this data to Delta Lake will improve query performance.

Which response to the junior data engineer s suggestion is correct?

A. Delta Lake statistics are not optimized for free text fields with high cardinality.

B. Text data cannot be stored with Delta Lake.

C. ZORDER ON review will need to be run to see performance gains.

D. The Delta log creates a term matrix for free text fields to support selective filtering.

E. Delta Lake statistics are only collected on the first 4 columns in a table.

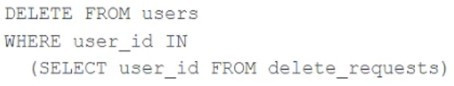

The data governance team is reviewing code used for deleting records for compliance with GDPR. They note the following logic is used to delete records from the Delta Lake table named users.

Assuming that user_id is a unique identifying key and that delete_requests contains all users that have requested deletion, which statement describes whether successfully executing the above logic guarantees that the records to be deleted are no longer accessible and why?

A. Yes; Delta Lake ACID guarantees provide assurance that the delete command succeeded fully and permanently purged these records.

B. No; the Delta cache may return records from previous versions of the table until the cluster is restarted.

C. Yes; the Delta cache immediately updates to reflect the latest data files recorded to disk.

D. No; the Delta Lake delete command only provides ACID guarantees when combined with the merge into command.

E. No; files containing deleted records may still be accessible with time travel until a vacuum command is used to remove invalidated data files.

A Databricks SQL dashboard has been configured to monitor the total number of records present in a collection of Delta Lake tables using the following query pattern:

SELECT COUNT (*) FROM table-

Which of the following describes how results are generated each time the dashboard is updated?

A. The total count of rows is calculated by scanning all data files

B. The total count of rows will be returned from cached results unless REFRESH is run

C. The total count of records is calculated from the Delta transaction logs

D. The total count of records is calculated from the parquet file metadata

E. The total count of records is calculated from the Hive metastore

The view updates represents an incremental batch of all newly ingested data to be inserted or updated in the customers table.

The following logic is used to process these records.

MERGE INTO customers

USING (

SELECT updates.customer_id as merge_ey, updates .*

FROM updates

UNION ALL

SELECT NULL as merge_key, updates .*

FROM updates JOIN customers

ON updates.customer_id = customers.customer_id

WHERE customers.current = true AND updates.address <> customers.address

) staged_updates

ON customers.customer_id = mergekey

WHEN MATCHED AND customers. current = true AND customers.address <> staged_updates.address THEN

UPDATE SET current = false, end_date = staged_updates.effective_date

WHEN NOT MATCHED THEN

INSERT (customer_id, address, current, effective_date, end_date)

VALUES (staged_updates.customer_id, staged_updates.address, true, staged_updates.effective_date, null)

Which statement describes this implementation?

A. The customers table is implemented as a Type 2 table; old values are overwritten and new customers are appended.

B. The customers table is implemented as a Type 1 table; old values are overwritten by new values and no history is maintained.

C. The customers table is implemented as a Type 2 table; old values are maintained but marked as no longer current and new values are inserted.

D. The customers table is implemented as a Type 0 table; all writes are append only with no changes to existing values.

Add Comments

Success Stories

- France

- Stein

- Mar 22, 2025

- Rating: 5.0 / 5.0

![]()

Great Read, everything is clear and precise. I always like to read over the new dumps as it is always good to refresh. Again this is a great study guide, it explains everything clearly, and is written in a way that really get the concepts across.

- NY

- Pass

- Mar 20, 2025

- Rating: 5.0 / 5.0

SO HELPFUL. I didn't study anything but this for a month. This dumps + my 2 year working experience helped me pass on my first attempt!

- Singapore

- Teressa

- Mar 19, 2025

- Rating: 4.2 / 5.0

![]()

Wonderful dumps. I really appreciated this dumps with so many new questions and update so quickly. Recommend strongly.

- Turkey

- Baines

- Mar 18, 2025

- Rating: 4.6 / 5.0

![]()

dumps is valid.

- United States

- Donn

- Mar 16, 2025

- Rating: 4.7 / 5.0

![]()

This dumps is still very valid, I have cleared the written exams passed today. Recommend.

- NY

- BT

- Mar 16, 2025

- Rating: 5.0 / 5.0

They are really great site. I bought the wrong product by chance and contact them immediately. They said usually they does not change the product if the buyer purchase the wrong product for their own reason but they still help me out of that. They send me the right exam I need! Thanks so much, guys. You saved me. I really recommend you guys to all my fellows.

- Sri Lanka

- Branden

- Mar 14, 2025

- Rating: 5.0 / 5.0

![]()

I passed. Good luck to you.

- Venezuela

- Arevalo

- Mar 14, 2025

- Rating: 5.0 / 5.0

Thanks god and thank you all. 100% valid. all the other questions are included in this file.

- US

- N1

- Mar 13, 2025

- Rating: 5.0 / 5.0

Save your money on expensive study guides or online classes courses. Use this dumps, it will be more helpful if you want to pass the exam on your first try!!!

- London

- Betty

- Mar 13, 2025

- Rating: 5.0 / 5.0

The dumps is great, they contain a very good knowledge about the exam. However, most of the materials are the same from the previous version. There are some new questions, and the organization of the pattern is much better than the older one. I'd say this dumps may contain 15-20 percent new materials, the rest is almost identical to the old one.

Databricks DATABRICKS-CERTIFIED-PROFESSIONAL-DATA-ENGINEER exam official information: The Databricks Certified Data Engineer Professional certification exam assesses an individual’s ability to use Databricks to perform advanced data engineering tasks.

Printable PDF

Printable PDF