Microsoft DP-203 dumps - 100% Pass Guarantee!

Vendor: Microsoft

Certifications: Microsoft Certifications

Exam Name: Data Engineering on Microsoft Azure

Exam Code: DP-203

Total Questions: 398 Q&As

Last Updated: Mar 12, 2025

Note: Product instant download. Please sign in and click My account to download your product.

PDF

- Q&As Identical to the VCE Product

- Windows, Mac, Linux, Mobile Phone

- Printable PDF without Watermark

- Instant Download Access

- Download Free PDF Demo

- Includes 365 Days of Free Updates

VCE

- Q&As Identical to the PDF Product

- Windows Only

- Simulates a Real Exam Environment

- Review Test History and Performance

- Instant Download Access

- Includes 365 Days of Free Updates

Microsoft DP-203 Last Month Results

- 96.3% Pass Rate

- 365 Days Free Update

- Verified By Professional IT Experts

- 24/7 Live Support

- Instant Download PDF&VCE

- 3 Days Preparation Before Test

- 18 Years Experience

- 6000+ IT Exam Dumps

- 100% Safe Shopping Experience

DP-203 Q&A's Detail

| Exam Code: | DP-203 |
| --- | --- |
| Total Questions: | 398 |
| Single & Multiple Choice | 244 |
| Drag Drop | 39 |
| Hotspot | 115 |
| Testlet | 2 |

CertBus Has the Latest DP-203 Exam Dumps in Both PDF and VCE Format

- Microsoft_certbus_DP-203_by_Jorge_Allen_Adajar_310.pdf (434.69 KB)

- Microsoft_certbus_DP-203_by_seepss_353.pdf (341.06 KB)

- Microsoft_certbus_DP-203_by_Xtreme_340.pdf (384.51 KB)

- Microsoft_certbus_DP-203_by_lucas_nyeinchan_366.pdf (253.68 KB)

- Microsoft_certbus_DP-203_by_Jellal_369.pdf (386.13 KB)

- Microsoft_certbus_DP-203_by_Jaiben,_Cochin_317.pdf (245.73 KB)

DP-203 Online Practice Questions and Answers

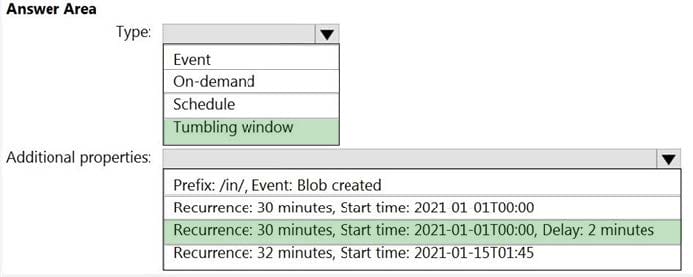

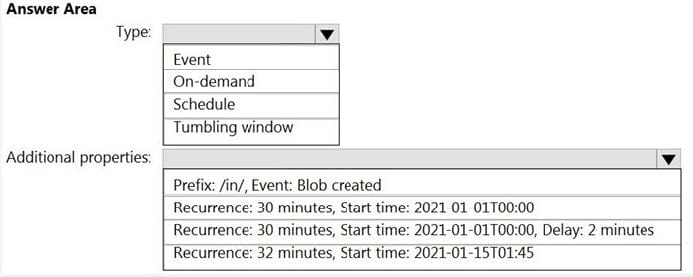

HOTSPOT

You build an Azure Data Factory pipeline to move data from an Azure Data Lake Storage Gen2 container to a database in an Azure Synapse Analytics dedicated SQL pool.

Data in the container is stored in the following folder structure.

/in/{YYYY}/{MM}/{DD}/{HH}/{mm}

The earliest folder is /in/2021/01/01/00/00. The latest folder is /in/2021/01/15/01/45.

You need to configure a pipeline trigger to meet the following requirements:

Existing data must be loaded.

Data must be loaded every 30 minutes.

Late-arriving data of up to two minutes must be included in the load for the time at which the data should have arrived.

How should you configure the pipeline trigger? To answer, select the appropriate options in the answer area.

NOTE: Each correct selection is worth one point.

Hot Area:

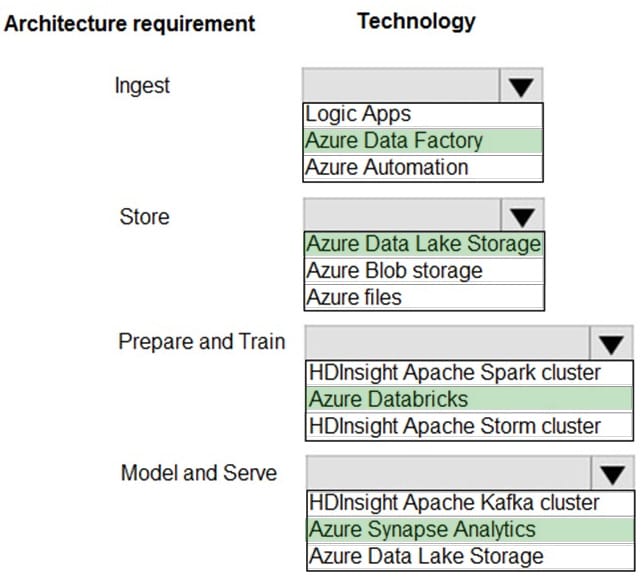
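The time arithmetic behind the scenario above can be sketched in plain Python. This is only an illustration of the folder layout, the 30-minute interval, and the two-minute late-arrival allowance stated in the question; it is not Azure Data Factory configuration and does not claim to be the expected answer. The function names are hypothetical, and counting windows from the earliest folder is an assumption.

```python
from datetime import datetime, timedelta

WINDOW = timedelta(minutes=30)         # load interval from the requirements
LATE_ALLOWANCE = timedelta(minutes=2)  # late-arrival allowance from the requirements


def folder_for(ts: datetime) -> str:
    """Render a timestamp using the /in/{YYYY}/{MM}/{DD}/{HH}/{mm} layout."""
    return ts.strftime("/in/%Y/%m/%d/%H/%M")


def window_start(ts: datetime, origin: datetime) -> datetime:
    """Return the start of the 30-minute window (counted from origin) that contains ts."""
    elapsed = ts - origin
    return origin + (elapsed // WINDOW) * WINDOW


def earliest_complete_run(start: datetime) -> datetime:
    """Earliest time a window can be processed if data up to two minutes late must be included."""
    return start + WINDOW + LATE_ALLOWANCE


earliest = datetime(2021, 1, 1, 0, 0)    # /in/2021/01/01/00/00
latest = datetime(2021, 1, 15, 1, 45)    # /in/2021/01/15/01/45

last_window = window_start(latest, earliest)
window_count = (last_window - earliest) // WINDOW + 1

print(folder_for(earliest))                # /in/2021/01/01/00/00
print(folder_for(last_window))             # /in/2021/01/15/01/30
print(window_count)                        # 676
print(earliest_complete_run(last_window))  # 2021-01-15 02:02:00
```

Under these assumptions, the existing data spans 676 half-hour windows, and the folder ending in `/01/45` falls inside the window that starts at 01:30.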

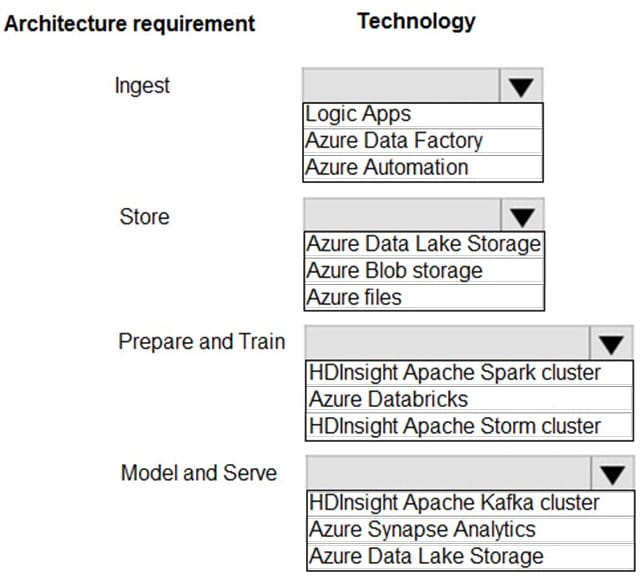

HOTSPOT

A company plans to use Platform-as-a-Service (PaaS) to create the new data pipeline process. The process must meet the following requirements:

Ingest:

1. Access multiple data sources.

2. Provide the ability to orchestrate workflow.

3. Provide the capability to run SQL Server Integration Services packages.

Store:

1. Optimize storage for big data workloads.

2. Provide encryption of data at rest.

3. Operate with no size limits.

Prepare and Train:

1. Provide a fully-managed and interactive workspace for exploration and visualization.

2. Provide the ability to program in R, SQL, Python, Scala, and Java.

3. Provide seamless user authentication with Azure Active Directory.

Model and Serve:

1. Implement native columnar storage.

2. Support the SQL language.

3. Provide support for structured streaming.

You need to build the data integration pipeline.

Which technologies should you use? To answer, select the appropriate options in the answer area.

NOTE: Each correct selection is worth one point.

Hot Area:

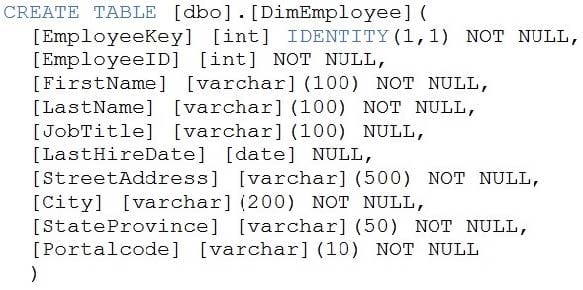

You have a table in an Azure Synapse Analytics dedicated SQL pool. The table was created by using the following Transact-SQL statement.

You need to alter the table to meet the following requirements:

Ensure that users can identify the current manager of employees.

Support creating an employee reporting hierarchy for your entire company.

Provide fast lookup of the managers' attributes such as name and job title.

Which column should you add to the table?

A. [ManagerEmployeeID] [int] NULL

B. [ManagerEmployeeID] [smallint] NULL

C. [ManagerEmployeeKey] [int] NULL

D. [ManagerName] [varchar](200) NULL

You build a data warehouse in an Azure Synapse Analytics dedicated SQL pool.

Analysts write a complex SELECT query that contains multiple JOIN and CASE statements to transform data for use in inventory reports. The inventory reports will use the data and additional WHERE parameters depending on the report. The reports will be produced once daily.

You need to implement a solution to make the dataset available for the reports. The solution must minimize query times.

What should you implement?

A. an ordered clustered columnstore index

B. a materialized view

C. result set caching

D. a replicated table

You are designing the folder structure for an Azure Data Lake Storage Gen2 account. You identify the following usage patterns:

1. Users will query data by using Azure Synapse Analytics serverless SQL pools and Azure Synapse Analytics serverless Apache Spark pools.

2. Most queries will include a filter on the current year or week.

3. Data will be secured by data source.

You need to recommend a folder structure that meets the following requirements:

1. Supports the usage patterns

2. Simplifies folder security

3. Minimizes query times

Which folder structure should you recommend?

A. \DataSource\SubjectArea\YYYY\WW\FileData_YYYY_MM_DD.parquet

B. \DataSource\SubjectArea\YYYY-WW\FileData_YYYY_MM_DD.parquet

C. DataSource\SubjectArea\WW\YYYY\FileData_YYYY_MM_DD.parquet

D. \YYYY\WW\DataSource\SubjectArea\FileData_YYYY_MM_DD.parquet

E. WW\YYYY\SubjectArea\DataSource\FileData_YYYY_MM_DD.parquet
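To make the placeholders in the options above concrete, here is a minimal Python sketch that expands two of the layouts for a sample date. The two templates mirror options A and D only to contrast a source-first ordering with a date-first ordering; this is not an indication of the expected answer. The names `Sales` and `Orders` are hypothetical, and treating `YYYY` and `WW` in the folder names as the ISO year and ISO week is an assumption.

```python
from datetime import date

# Templates mirroring options A and D; the backslashes follow the source text.
LAYOUT_A = r"\{source}\{subject}\{yyyy}\{ww}\{file}"
LAYOUT_D = r"\{yyyy}\{ww}\{source}\{subject}\{file}"


def render(template: str, source: str, subject: str, day: date) -> str:
    """Expand a folder template for one day, using ISO year and week for YYYY and WW."""
    iso_year, iso_week, _ = day.isocalendar()
    return template.format(
        source=source,
        subject=subject,
        yyyy=f"{iso_year:04d}",
        ww=f"{iso_week:02d}",
        file=f"FileData_{day:%Y_%m_%d}.parquet",
    )


sample_day = date(2021, 1, 15)
print(render(LAYOUT_A, "Sales", "Orders", sample_day))
# \Sales\Orders\2021\02\FileData_2021_01_15.parquet
print(render(LAYOUT_D, "Sales", "Orders", sample_day))
# \2021\02\Sales\Orders\FileData_2021_01_15.parquet
```

In the first layout, everything for one data source sits under a single top-level folder. In the second, each data source appears again under every year and week folder. With ISO numbering, 1 January 2021 lands in week 53 of 2020, so the folder year can differ from the calendar year in the file name.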


Success Stories

- United Kingdom

- Ian

- Mar 19, 2025

- Rating: 4.5 / 5.0

Passed my exam today. Valid dumps. Nice job!

- India

- zuher

- Mar 19, 2025

- Rating: 4.9 / 5.0

The content is updated quickly, and there are many new questions in these dumps. Thanks very much.

- Rwanda

- Wanda

- Mar 18, 2025

- Rating: 4.5 / 5.0

Dump is still valid, I just passed my DP-203 exam today. Thanks to you all.

- United States

- Talon

- Mar 18, 2025

- Rating: 4.3 / 5.0

Still valid!! 97%

- United States

- Donn

- Mar 16, 2025

- Rating: 4.7 / 5.0

These dumps are still very valid. I passed the written exam today. Recommended.

- Saudi Arabia

- Alvin

- Mar 15, 2025

- Rating: 4.4 / 5.0

I'm so glad that I chose you to help with my DP-203 exam. I passed my exam with a full score. I will recommend you to friends.

- Columbia

- Dustin

- Mar 14, 2025

- Rating: 5.0 / 5.0

Unlike other materials, this is not only practice questions. One of my friends took the exam and told me they are really actual exam questions. Although there are so many questions (over a thousand) in the material and you need lots of time to go over all of it, it's worth it. I strongly recommend this.

- Michigan

- Jason

- Mar 13, 2025

- Rating: 5.0 / 5.0

I'm really glad I started with this before taking my DP-203 exam. They did a great job of being clear and concise without deviating. They cover the domains in more detail with a straight-to-the-point approach, without dragging things out in stories. I also feel that the practice exams are very helpful, as they helped me narrow down the weaker areas that need more time and focus.

- MC

- Carl

- Mar 13, 2025

- Rating: 5.0 / 5.0

I used this as my primary study guide for the DP-203 exam. The included practice questions were outstanding. Overall, I really liked these dumps. They had good coverage of each of the domains. The only thing I was not comfortable with is that they were sometimes too verbose when explaining topics, and they did not give an explanation for every question.

- United States

- KP

- Mar 13, 2025

- Rating: 5.0 / 5.0

Very easy read. Bought the dumps a little over a month ago, read them question by question, attended an online course, and passed the CISSP exam last Thursday. Did not use any other book in my study.

Microsoft DP-203 exam official information: As a candidate for this exam, you should have subject matter expertise in integrating, transforming, and consolidating data from various structured, unstructured, and streaming data systems into a suitable schema for building analytics solutions.

Printable PDF
