DATABRICKS-MACHINE-LEARNING-PROFESSIONAL Online Practice Questions and Answers

A machine learning engineer is monitoring categorical input variables for a production machine learning application. The engineer believes that missing values are becoming more prevalent in more recent data for a particular value in one of the categorical input variables.

Which of the following tools can the machine learning engineer use to assess their theory?

A. Kolmogorov-Smirnov (KS) test

B. One-way Chi-squared Test

C. Two-way Chi-squared Test

D. Jensen-Shannon distance

E. None of these
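One way to frame this theory is to count rows where the monitored value is missing versus present, once in an older reference window and once in the recent window. That gives a 2×2 contingency table, which Pearson's chi-squared test of independence can evaluate. Below is a minimal pure-Python sketch with hypothetical counts. In practice `scipy.stats.chi2_contingency` is the usual choice; it applies Yates' continuity correction to 2×2 tables by default, so its statistic will differ from this uncorrected one.

```python
import math


def chi_squared_2x2(table):
    """Pearson chi-squared test of independence for a 2x2 contingency table.

    Rows are time windows (reference, recent); columns are (missing, present).
    Returns (statistic, p_value) with 1 degree of freedom and no continuity correction.
    """
    row_totals = [sum(row) for row in table]
    col_totals = [sum(col) for col in zip(*table)]
    n = sum(row_totals)
    statistic = 0.0
    for i, row in enumerate(table):
        for j, observed in enumerate(row):
            expected = row_totals[i] * col_totals[j] / n
            statistic += (observed - expected) ** 2 / expected
    # With 1 degree of freedom, the chi-squared survival function is erfc(sqrt(x / 2)).
    p_value = math.erfc(math.sqrt(statistic / 2))
    return statistic, p_value


# Hypothetical counts for rows with the monitored category value.
reference = [10, 90]  # older data: 10 missing, 90 present
recent = [30, 70]     # newer data: 30 missing, 70 present
stat, p = chi_squared_2x2([reference, recent])
print(round(stat, 2), p < 0.05)  # 12.5 True
```

A small p-value suggests the missing-value share differs between the two windows; it does not by itself say which direction, so compare the two missing rates as well.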

Which of the following machine learning model deployment paradigms is the most common for machine learning projects?

A. On-device

B. Streaming

C. Real-time

D. Batch

E. None of these deployments

A data scientist would like to enable MLflow Autologging for all machine learning libraries used in a notebook. They want to ensure that MLflow Autologging is used no matter what version of the Databricks Runtime for Machine Learning is used to run the notebook and no matter what workspace-wide configurations are selected in the Admin Console.

Which of the following lines of code can they use to accomplish this task?

A. mlflow.sklearn.autolog()

B. mlflow.spark.autolog()

C. spark.conf.set("autologging", True)

D. It is not possible to automatically log MLflow runs.

E. mlflow.autolog()

A data scientist has developed a model named model and computed its RMSE on the test set. They have assigned this value to the variable rmse. They now want to manually store the RMSE value with the MLflow run.

They write the following incomplete code block:

image9

Which of the following lines of code can be used to fill in the blank so the code block can successfully complete the task?

A. log_artifact

B. log_model

C. log_metric

D. log_param

E. There is no way to store values like this.

A machine learning engineer and data scientist are working together to convert a batch deployment to an always-on streaming deployment. The machine learning engineer has expressed that rigorous data tests must be put in place as a part of their conversion to account for potential changes in data formats.

Which of the following describes why these data type tests and checks are particularly important for streaming deployments?

A. Because the streaming deployment is always on, all types of data must be handled without producing an error

B. All of these statements

C. Because the streaming deployment is always on, there is no practitioner to debug poor model performance

D. Because the streaming deployment is always on, there is a need to confirm that the deployment can autoscale

E. None of these statements
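In an always-on pipeline no one inspects each incoming batch, so a malformed record is usually routed aside instead of being allowed to crash the stream. Below is a minimal plain-Python sketch of that idea. The schema and field names are hypothetical. In a Spark Structured Streaming job the same checks would more likely be written as DataFrame filters, which this sketch does not show.

```python
EXPECTED_SCHEMA = {"user_id": int, "country": str, "amount": float}  # hypothetical schema


def validate_record(record, schema=EXPECTED_SCHEMA):
    """Return a list of problems for one incoming record (empty list means valid)."""
    problems = []
    for field, expected_type in schema.items():
        if field not in record or record[field] is None:
            problems.append(f"missing {field}")
        elif not isinstance(record[field], expected_type):
            got = type(record[field]).__name__
            problems.append(f"{field}: expected {expected_type.__name__}, got {got}")
    return problems


def split_batch(records):
    """Send valid records on to scoring and quarantine invalid ones instead of raising."""
    valid, quarantined = [], []
    for record in records:
        problems = validate_record(record)
        if problems:
            quarantined.append((record, problems))
        else:
            valid.append(record)
    return valid, quarantined


batch = [
    {"user_id": 1, "country": "US", "amount": 9.99},
    {"user_id": "2", "country": "DE", "amount": 5.0},  # user_id arrived as a string
    {"user_id": 3, "country": None, "amount": 1.5},    # country is missing
]
valid, quarantined = split_batch(batch)
print(len(valid), [p for _, p in quarantined])
```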

A machine learning engineering team wants to build a continuous pipeline for data preparation of a machine learning application. The team would like the data to be fully processed and made ready for inference in a series of equal-sized batches.

Which of the following tools can be used to provide this type of continuous processing?

A. Spark UDFs

B. Structured Streaming

C. MLflow

D. Delta Lake

E. AutoML

A machine learning engineer wants to deploy a model for real-time serving using MLflow Model Serving. For the model, the machine learning engineer currently has one model version in each of the stages in the MLflow Model Registry. The engineer wants to know which model versions can be queried once Model Serving is enabled for the model.

Which of the following lists all of the MLflow Model Registry stages whose model versions are automatically deployed with Model Serving?

A. Staging, Production, Archived

B. Production

C. None, Staging, Production, Archived

D. Staging, Production

E. None, Staging, Production

A data scientist has written a function to track the runs of their random forest model. The data scientist is changing the number of trees in the forest from run to run.

Which of the following MLflow operations is designed to log single values like the number of trees in a random forest?

A. mlflow.log_artifact

B. mlflow.log_model

C. mlflow.log_metric

D. mlflow.log_param

E. There is no way to store values like this.

Which of the following tools can assist in real-time deployments by packaging software with its own application, tools, and libraries?

A. Cloud-based compute

B. None of these tools

C. REST APIs

D. Containers

E. Autoscaling clusters

A data scientist has developed a scikit-learn model sklearn_model and they want to log the model using MLflow.

They write the following incomplete code block:

image14

Which of the following lines of code can be used to fill in the blank so the code block can successfully complete the task?

A. mlflow.spark.track_model(sklearn_model, "model")

B. mlflow.sklearn.log_model(sklearn_model, "model")

C. mlflow.spark.log_model(sklearn_model, "model")

D. mlflow.sklearn.load_model("model")

E. mlflow.sklearn.track_model(sklearn_model, "model")

Which of the following describes the purpose of the context parameter in the predict method of Python models for MLflow?

A. The context parameter allows the user to specify which version of the registered MLflow Model should be used based on the given application's current scenario

B. The context parameter allows the user to document the performance of a model after it has been deployed

C. The context parameter allows the user to include relevant details of the business case to allow downstream users to understand the purpose of the model

D. The context parameter allows the user to provide the model with completely custom if-else logic for the given application's current scenario

E. The context parameter allows the user to provide the model access to objects like preprocessing models or custom configuration files

A machine learning engineer wants to log and deploy a model as an MLflow pyfunc model. They have custom preprocessing that needs to be completed on feature variables prior to fitting the model or computing predictions using that model.

They decide to wrap this preprocessing in a custom model class ModelWithPreprocess, where the preprocessing is performed when calling fit and when calling predict. They then log the fitted model of the ModelWithPreprocess class as a pyfunc model.

Which of the following is a benefit of this approach when loading the logged pyfunc model for downstream deployment?

A. The pyfunc model can be used to deploy models in a parallelizable fashion

B. The same preprocessing logic will automatically be applied when calling fit

C. The same preprocessing logic will automatically be applied when calling predict

D. This approach has no impact when loading the logged pyfunc model for downstream deployment

E. There is no longer a need for pipeline-like machine learning objects

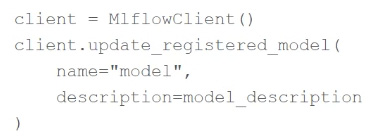

A machine learning engineering manager has asked all of the engineers on their team to add text descriptions to each of the model projects in the MLflow Model Registry. They are starting with the model project "model" and they'd like to add

the text in the model_description variable.

The team is using the following line of code:

Which of the following changes does the team need to make to the above code block to accomplish the task?

A. Replace update_registered_model with update_model_version

B. There are no changes necessary

C. Replace description with artifact

D. Replace client.update_registered_model with mlflow

E. Add a Python model as an argument to update_registered_model

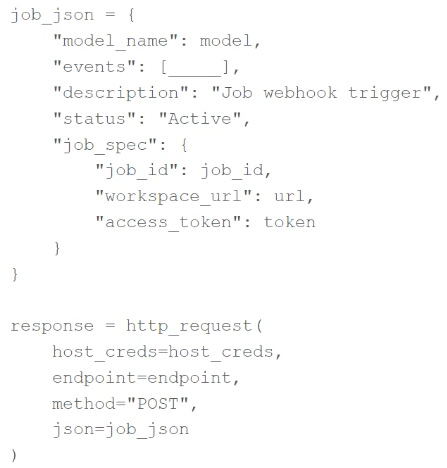

A machine learning engineer is attempting to create a webhook that will trigger a Databricks Job job_id when a model version for model model transitions into any MLflow Model Registry stage. They have the following incomplete code block:

Which of the following lines of code can be used to fill in the blank so that the code block accomplishes the task?

A. "MODEL_VERSION_CREATED"

B. "MODEL_VERSION_TRANSITIONED_TO_PRODUCTION"

C. "MODEL_VERSION_TRANSITIONED_TO_STAGING"

D. "MODEL_VERSION_TRANSITIONED_STAGE"

E. "MODEL_VERSION_TRANSITIONED_TO_STAGING", "MODEL_VERSION_TRANSITIONED_TO_PRODUCTION"
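For comparison, here is a sketch that swaps in the Databricks REST endpoint `/api/2.0/mlflow/registry-webhooks/create` in place of the Python client shown in the question. The body field names (`model_name`, `events`, `job_spec`) follow our reading of the Databricks registry webhooks documentation and should be checked against it. The workspace URL, token, and job ID are placeholders. The request is only built, not sent.

```python
import json
import urllib.request


def build_job_webhook_request(workspace_url, token, model_name, job_id):
    """Build, but do not send, a request that creates a job webhook for a registered model."""
    body = {
        "model_name": model_name,
        # Event fired when a version of the model changes registry stage.
        "events": ["MODEL_VERSION_TRANSITIONED_STAGE"],
        "job_spec": {"job_id": job_id, "access_token": token},
        "description": "Trigger a job on any stage transition",
    }
    return urllib.request.Request(
        url=f"{workspace_url}/api/2.0/mlflow/registry-webhooks/create",
        data=json.dumps(body).encode("utf-8"),
        headers={"Authorization": f"Bearer {token}", "Content-Type": "application/json"},
        method="POST",
    )


# Placeholder values; urllib.request.urlopen(req) would actually send the request.
req = build_job_webhook_request(
    "https://example.cloud.databricks.com", "dapi-placeholder", "model", "123"
)
print(req.get_method(), req.full_url)
print(json.loads(req.data)["events"])
```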

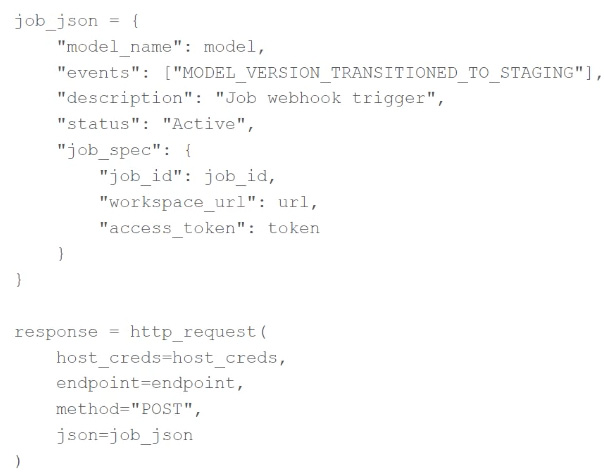

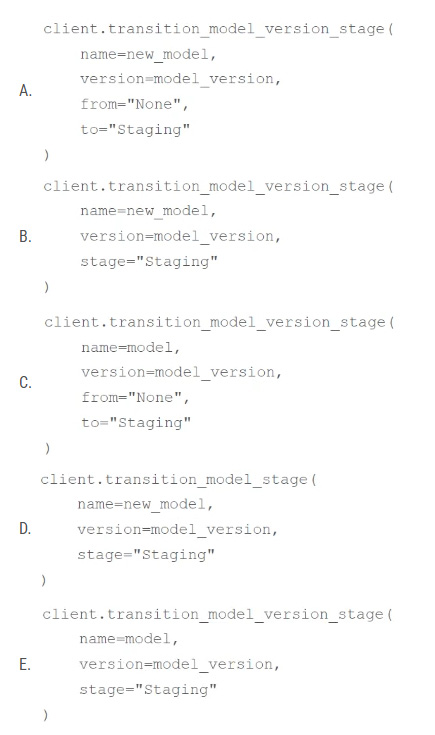

A machine learning engineer has created a webhook with the following code block:

Which of the following code blocks will trigger this webhook to run the associated job?

A. Option A

B. Option B

C. Option C

D. Option D

E. Option E