CCA-500 Online Practice Questions and Answers

Each node in your Hadoop cluster, running YARN, has 64GB memory and 24 cores. Your yarn-site.xml has the following configuration:

You want YARN to launch no more than 16 containers per node. What should you do?

A. Modify yarn-site.xml with the following property:

B. Modify yarn-site.xml with the following property:

C. Modify yarn-site.xml with the following property:

D. No action is needed: YARN's dynamic resource allocation automatically optimizes the node memory and cores
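The arithmetic behind this kind of question can be sketched as follows. This is a minimal illustration, not the YARN scheduler's actual logic: it looks at memory only, assumes all 64 GB is offered to YARN through yarn.nodemanager.resource.memory-mb, and assumes every container requests exactly yarn.scheduler.minimum-allocation-mb. The function name and the sample values are hypothetical and are not taken from the configuration missing above.

```python
def max_containers_per_node(node_memory_mb: int, min_allocation_mb: int) -> int:
    """Memory-only upper bound on concurrently running containers per node."""
    if min_allocation_mb <= 0:
        raise ValueError("min_allocation_mb must be positive")
    return node_memory_mb // min_allocation_mb


# Assumption: the whole 64 GB of the node is made available to YARN.
node_memory_mb = 64 * 1024

# Hypothetical minimum-allocation values, to show how the bound moves.
for min_alloc_mb in (1024, 2048, 4096):
    bound = max_containers_per_node(node_memory_mb, min_alloc_mb)
    print(f"minimum allocation {min_alloc_mb} MB: at most {bound} containers")
```

A larger minimum allocation lowers the bound. In a real cluster the vcore settings can limit the container count as well.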

You are configuring your cluster to run HDFS and MapReduce v2 (MRv2) on YARN. Which two daemons need to be installed on your cluster's master nodes? (Choose two)

A. HMaster

B. ResourceManager

C. TaskManager

D. JobTracker

E. NameNode

F. DataNode

You are running a Hadoop cluster with a NameNode on host mynamenode, a secondary NameNode on host mysecondarynamenode and several DataNodes.

Which best describes how you determine when the last checkpoint happened?

A. Execute hdfs namenode report on the command line and look at the Last Checkpoint information

B. Execute hdfs dfsadmin -saveNamespace on the command line which returns to you the last checkpoint value in fstime file

C. Connect to the web UI of the Secondary NameNode (http://mysecondary:50090/) and look at the "Last Checkpoint" information

D. Connect to the web UI of the NameNode (http://mynamenode:50070) and look at the "Last Checkpoint" information

What does CDH packaging do on install to facilitate Kerberos security setup?

A. Automatically configures permissions for log files at and MAPRED_LOG_DIR/userlogs

B. Creates users for hdfs and mapreduce to facilitate role assignment

C. Creates directories for temp, hdfs, and mapreduce with the correct permissions

D. Creates a set of pre-configured Kerberos keytab files and their permissions

E. Creates and configures your kdc with default cluster values

You need to analyze 60,000,000 images stored in JPEG format, each of which is approximately 25 KB. Because your Hadoop cluster isn't optimized for storing and processing many small files, you decide to do the following actions:

1. Group the individual images into a set of larger files

2. Use the set of larger files as input for a MapReduce job that processes them directly with Python using Hadoop streaming.

Which data serialization system gives the flexibility to do this?

A. CSV

B. XML

C. HTML

D. Avro

E. SequenceFiles

F. JSON
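To see why grouping the images matters, the sketch below compares the block count when each image is stored as its own file with the block count when the same bytes are packed into large files. The 128 MB block size is an assumption (the question does not state one), and the packed figure ignores any overhead added by the container format.

```python
IMAGE_COUNT = 60_000_000
IMAGE_SIZE_BYTES = 25 * 1024           # "approximately 25 KB" per the question
BLOCK_SIZE_BYTES = 128 * 1024 * 1024   # assumption: 128 MB HDFS block size


def ceil_div(a: int, b: int) -> int:
    """Integer division rounded up."""
    return -(-a // b)


total_bytes = IMAGE_COUNT * IMAGE_SIZE_BYTES

# Stored individually, every file needs at least one block of its own.
blocks_as_small_files = IMAGE_COUNT

# Packed back to back into large files, blocks are filled before a new one starts.
blocks_when_packed = ceil_div(total_bytes, BLOCK_SIZE_BYTES)

print(f"total data: {total_bytes / 1024**4:.2f} TiB")
print(f"blocks as individual files: {blocks_as_small_files:,}")
print(f"blocks when packed: {blocks_when_packed:,}")
```

Each file and each block is an object the NameNode tracks in memory, so the packed layout reduces that load by several orders of magnitude.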

Which scheduler would you deploy to ensure that your cluster allows short jobs to finish within a reasonable time without starving long-running jobs?

A. Complexity Fair Scheduler (CFS)

B. Capacity Scheduler

C. Fair Scheduler

D. FIFO Scheduler

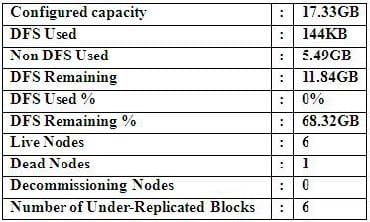

Cluster Summary:

45 files and directories, 12 blocks = 57 total. Heap size is 15.31 MB/193.38MB(7%)

Refer to the above screenshot.

You configure a Hadoop cluster with seven DataNodes and one of your monitoring UIs displays the details shown in the exhibit.

What does this tell you?

A. The DataNode JVM on one host is not active

B. Because your under-replicated blocks count matches the Live Nodes, one node is dead, and your DFS Used % equals 0%, you can't be certain that your cluster has all the data you've written it.

C. Your cluster has lost all HDFS data which had blocks stored on the dead DataNode

D. The HDFS cluster is in safe mode

Which YARN daemon or service negotiates map and reduce Containers from the Scheduler, tracking their status and monitoring progress?

A. NodeManager

B. ApplicationMaster

C. ApplicationManager

D. ResourceManager

On a cluster running CDH 5.0 or above, you use the hadoop fs -put command to write a 300MB file into a previously empty directory using an HDFS block size of 64 MB. Just after this command has finished writing 200 MB of this file, what would another user see when they look in the directory?

A. The directory will appear to be empty until the entire file write is completed on the cluster

B. They will see the file with a ._COPYING_ extension on its name. If they view the file, they will see contents of the file up to the last completed block (as each 64MB block is written, that block becomes available)

C. They will see the file with a ._COPYING_ extension on its name. If they attempt to view the file, they will get a ConcurrentFileAccessException until the entire file write is completed on the cluster

D. They will see the file with its original name. If they attempt to view the file, they will get a ConcurrentFileAccessException until the entire file write is completed on the cluster
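The block arithmetic in this scenario can be worked out with a short sketch. It only counts blocks from the sizes given in the question; it does not model what a concurrent reader is actually shown. The function name and the returned keys are hypothetical.

```python
def block_progress(file_mb: int, block_mb: int, written_mb: int) -> dict:
    """Split a partially written file into completed blocks and the open block."""
    written_mb = min(written_mb, file_mb)
    total_blocks = -(-file_mb // block_mb)      # ceiling division
    completed_blocks = written_mb // block_mb   # blocks that are completely filled
    return {
        "total_blocks": total_blocks,
        "completed_blocks": completed_blocks,
        "mb_in_completed_blocks": completed_blocks * block_mb,
        "mb_in_open_block": written_mb - completed_blocks * block_mb,
    }


# Values from the question: 300 MB file, 64 MB blocks, 200 MB written so far.
progress = block_progress(file_mb=300, block_mb=64, written_mb=200)
print(progress)
```

With these inputs the file will occupy five blocks in total. At the 200 MB mark, three blocks (192 MB) are full and 8 MB sits in the block still being written.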

Which command does Hadoop offer to discover missing or corrupt HDFS data?

A. hdfs fs du

B. hdfs fsck

C. Dskchk

D. The map-only checksum

E. Hadoop does not provide any tools to discover missing or corrupt data; there is no need because three replicas are kept for each data block

Your cluster is running MapReduce version 2 (MRv2) on YARN. Your ResourceManager is configured to use the FairScheduler. Now you want to configure your scheduler such that a new user on the cluster can submit jobs into their own queue on application submission. Which configuration should you set?

A. You can specify new queue name when user submits a job and new queue can be created dynamically if the property yarn.scheduler.fair.allow-undeclared-pools = true

B. yarn.scheduler.fair.user.fair-as-default-queue = false and yarn.scheduler.fair.allow-undeclared-pools = true

C. You can specify new queue name when user submits a job and new queue can be created dynamically if yarn.scheduler.fair.user-as-default-queue = false

D. You can specify new queue name per application in allocations.xml file and have new jobs automatically assigned to the application queue

You use the hadoop fs -put command to add a file "sales.txt" to HDFS. This file is small enough that it fits into a single block, which is replicated to three nodes in your cluster (with a replication factor of 3). One of the nodes holding this file (a single block) fails. How will the cluster handle the replication of the file in this situation?

A. The file will remain under-replicated until the administrator brings that node back online

B. The cluster will re-replicate the file the next time the system administrator reboots the NameNode daemon (as long as the file's replication factor doesn't fall below)

C. This will be immediately re-replicated and all other HDFS operations on the cluster will halt until the cluster's replication values are restored

D. The file will be re-replicated automatically after the NameNode determines it is under-replicated based on the block reports it receives from the DataNodes
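The idea of spotting under-replicated blocks from block reports can be illustrated with a toy model. This is a simplified sketch, not the NameNode's implementation: block reports are represented as a plain mapping from a DataNode name to the set of block IDs it holds, and all names (dn1, blk_sales, the function itself) are hypothetical.

```python
from collections import Counter


def under_replicated(block_reports: dict, target_replication: int) -> dict:
    """Return {block_id: missing_replicas} for blocks below the target.

    block_reports maps each live DataNode to the set of blocks it reported.
    A block that no live node reports does not appear in the result at all.
    """
    live_replicas = Counter()
    for blocks in block_reports.values():
        live_replicas.update(blocks)  # each node contributes one replica per block
    return {
        block: target_replication - count
        for block, count in live_replicas.items()
        if count < target_replication
    }


# Three nodes hold the single block of the file.
reports = {"dn1": {"blk_sales"}, "dn2": {"blk_sales"}, "dn3": {"blk_sales"}}
healthy = under_replicated(reports, target_replication=3)

# One node fails, so its report is no longer part of the picture.
del reports["dn3"]
after_failure = under_replicated(reports, target_replication=3)

print(healthy, after_failure)
```

Once the block shows up as one replica short, it becomes a candidate for copying to another live node.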

Your cluster has the following characteristics:

A rack aware topology is configured and on

Replication is set to 3

Cluster block size is set to 64MB

Which describes the file read process when a client application connects into the cluster and requests a 50MB file?

A. The client queries the NameNode for the locations of the block, and reads all three copies. The first copy to complete transfer to the client is the one the client reads as part of Hadoop's speculative execution framework.

B. The client queries the NameNode for the locations of the block, and reads from the first location in the list it receives.

C. The client queries the NameNode for the locations of the block, and reads from a random location in the list it receives to eliminate network I/O loads by balancing which nodes it retrieves data from at any given time.

D. The client queries the NameNode which retrieves the block from the nearest DataNode to the client then passes that block back to the client.
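With a 64MB block size, a 50MB file fits in a single block, so the read involves one list of replica locations. The sketch below shows one way such a list could be ordered by network distance under a rack-aware topology. It assumes the common convention of distance 0 for the same node, 2 for the same rack, and 4 for a different rack. The (rack, host) tuples and function names are hypothetical, and this is an illustration rather than HDFS's actual code.

```python
def distance(a: tuple, b: tuple) -> int:
    """Network distance between two (rack, host) locations."""
    if a == b:
        return 0   # same node
    if a[0] == b[0]:
        return 2   # different node, same rack
    return 4       # different rack


def order_replicas(client: tuple, replicas: list) -> list:
    """Sort replica locations so the closest one to the client comes first."""
    return sorted(replicas, key=lambda replica: distance(client, replica))


# Hypothetical topology: the client sits on rack1, replicas span two racks.
client = ("/rack1", "host1")
replicas = [("/rack2", "host5"), ("/rack1", "host2"), ("/rack2", "host6")]
ordered = order_replicas(client, replicas)
print(ordered)
```

In this model the replica on the client's own rack is listed first, and a reader that simply takes the first entry ends up on the closest copy.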

You have a cluster running with a FIFO scheduler enabled. You submit a large job A to the cluster, which you expect to run for one hour. Then, you submit job B to the cluster, which you expect to run a couple of minutes only.

You submit both jobs with the same priority.

Which two best describe how the FIFO Scheduler arbitrates the cluster resources for a job and its tasks? (Choose two)

A. Because there is more than a single job on the cluster, the FIFO Scheduler will enforce a limit on the percentage of resources allocated to a particular job at any given time

B. Tasks are scheduled on the order of their job submission

C. The order of execution of job may vary

D. Given jobs A and B submitted in that order, all tasks from job A are guaranteed to finish before all tasks from job B

E. The FIFO Scheduler will give, on average, an equal share of the cluster resources over the job lifecycle

F. The FIFO Scheduler will pass an exception back to the client when Job B is submitted, since all slots on the cluster are in use
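A toy simulation can make first-in, first-out task ordering concrete. The sketch below is a simplified model under stated assumptions, not the scheduler's real implementation: the cluster is a fixed number of identical slots, each task has a known duration, and tasks are handed to the earliest free slot strictly in job submission order. The job names, durations, and slot count are hypothetical.

```python
import heapq


def fifo_schedule(jobs: list, slots: int) -> list:
    """Assign tasks to slots in job submission order.

    jobs is a list of (job_name, [task_durations]) in submission order.
    Returns a list of (job_name, start_time, end_time), one entry per task.
    """
    free_at = [0] * slots          # time at which each slot next becomes free
    heapq.heapify(free_at)
    timeline = []
    for name, durations in jobs:   # earlier jobs hand out all their tasks first
        for duration in durations:
            start = heapq.heappop(free_at)
            end = start + duration
            heapq.heappush(free_at, end)
            timeline.append((name, start, end))
    return timeline


# Job A has one short and one long task; job B has a single short task.
timeline = fifo_schedule([("A", [2, 10]), ("B", [1])], slots=2)
for job, start, end in timeline:
    print(f"job {job}: start={start} end={end}")
```

In this model job B's task starts only after all of job A's tasks have been placed, yet it finishes at time 3 while one of A's tasks is still running until time 10.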

Your company stores user profile records in an OLTP database. You want to join these records with web server logs you have already ingested into the Hadoop file system. What is the best way to obtain and ingest these user records?

A. Ingest with Hadoop streaming

B. Ingest using Hive's LOAD DATA command

C. Ingest with sqoop import

D. Ingest with Pig's LOAD command

E. Ingest using the HDFS put command